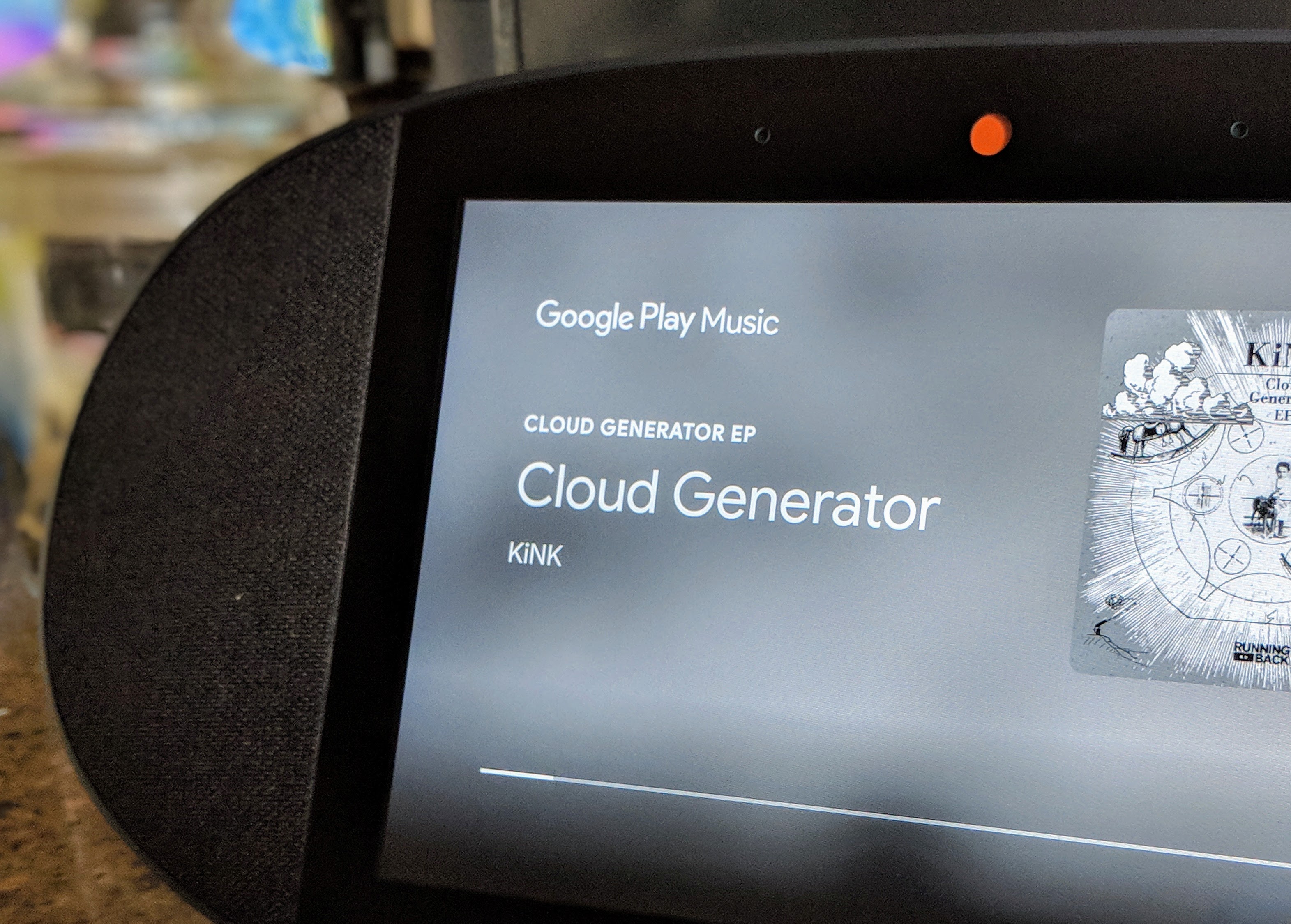

If you’re looking for a smart display that’s powered by the Google Assistant, you now have two choices: the Lenovo Smart Display and the JBL Link View. Lenovo was first out of the gate with its surprisingly stylish gadget, but it also left room for improvement. JBL, given its heritage as an audio company, is putting the emphasis on sound quality, with stereo speakers and a surprising amount of bass.

In terms of the overall design, the Link View isn’t going to win any prizes, but its pill shape definitely isn’t ugly either. JBL makes the Link View in any color you like, as long as that’s black. It’ll likely fit in with your home decor, though.

The Link View has an 8-inch high-definition touchscreen that is more than crisp enough for the maps, photos and YouTube videos you’ll play on it. Over the two weeks I’ve been using it, the screen turned out to be a bit of a fingerprint magnet, but that’s to be expected: I put it on the kitchen counter and regularly used it to entertain myself while waiting for the water to boil.

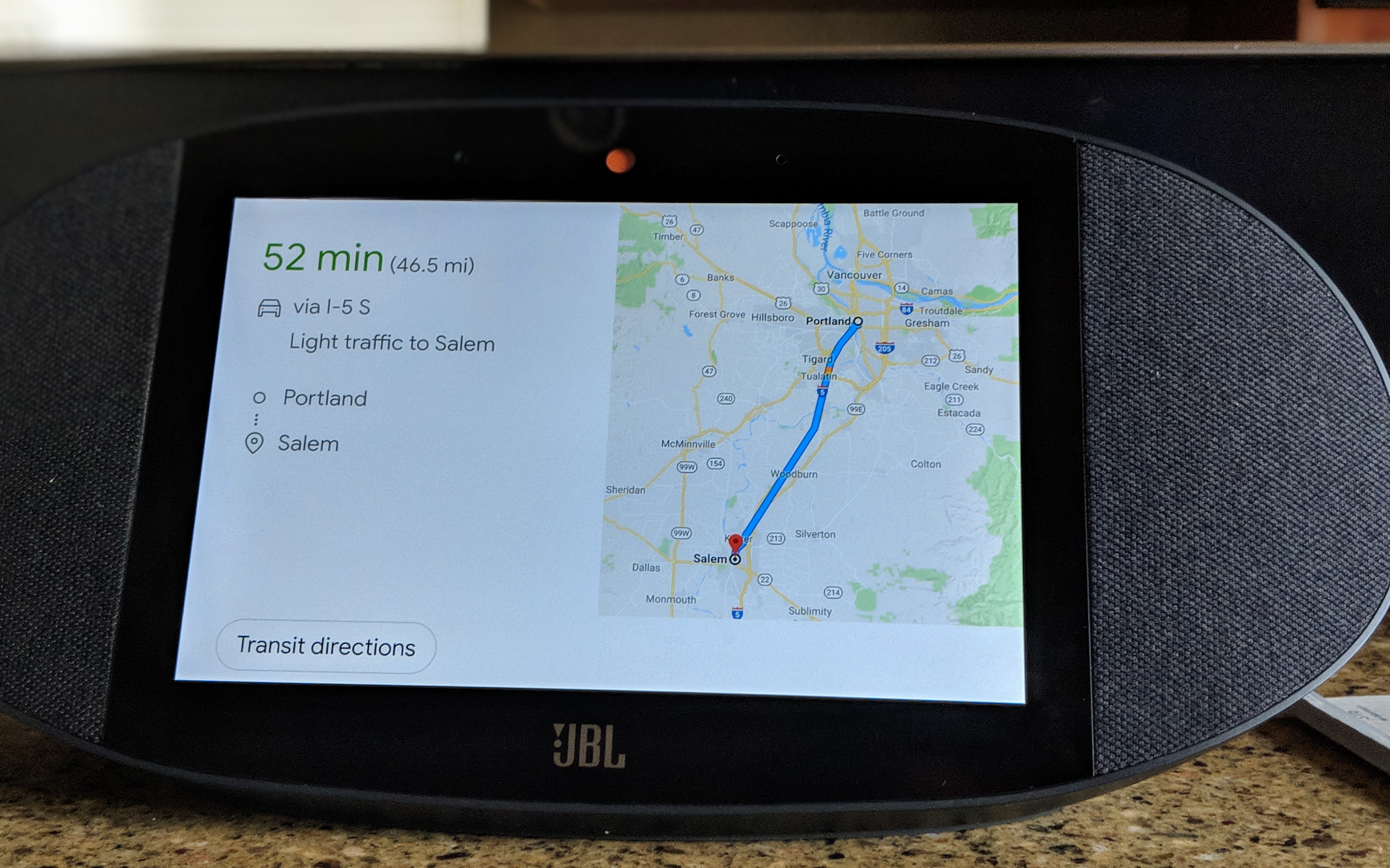

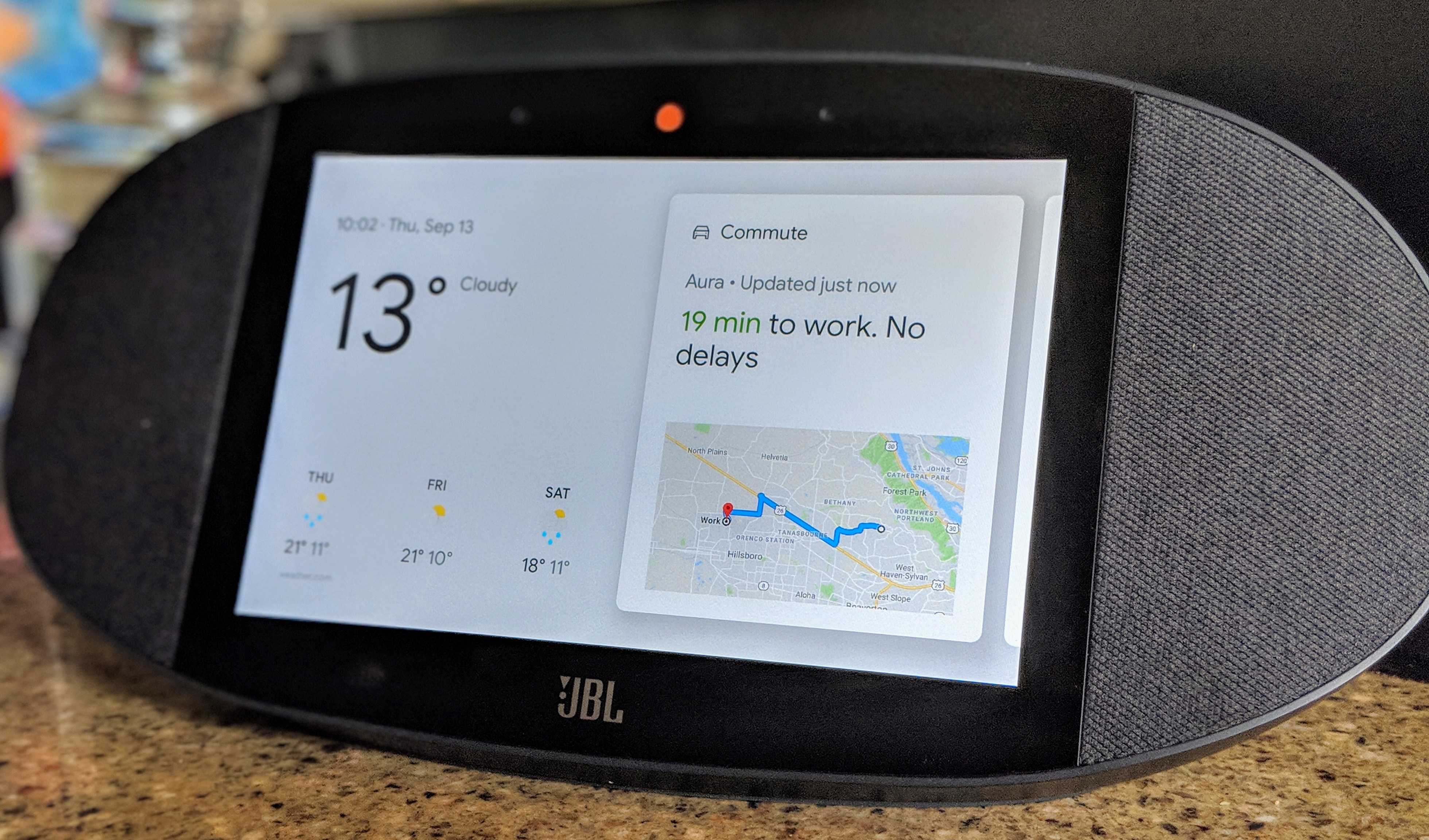

At the end of the day, you’re not going to spend $250 just for a nice speaker with a built-in tablet. What matters most here is whether the visual side of the Google Assistant works for you. I find that it adds an extra dimension to the audio responses, whether that’s a weather report, a map of my daily commute (which can change depending on traffic) or a video news report. Google’s interface for these devices is simple and clear, with large buttons and clearly presented information. And maybe that’s no surprise. These smart displays are, after all, the ideal surface for Google’s Material Design language.

As a demo, Google likes to talk about how these gadgets can help you while cooking, with step-by-step recipes and videos. It’s a nice demo indeed, and I thought it would help me get a bit more creative with new recipes. In reality, though, I never have the ingredients I need to cook what Google suggests. If you’re a better meal planner than I am, your mileage will likely vary.

What I find surprisingly useful is the display’s integration of Google Duo. I’m aware that the Allo/Duo combo is a bit of a flop, but the display does make you want to use Duo because you can easily have a video chat while just doing your thing in the kitchen. If you set up multiple users, the display can even receive calls for all of them. And don’t worry, there is a physical slider you can use to shut down the camera whenever you want.

The Link View also made me appreciate Google’s Assistant routines more (and my colleague Lucas Matney found the same when he tried out the Lenovo Smart Display). And it’s just a bit easier to look at the weather graphics instead of having the Assistant rattle off the temperature for the next couple of days.

Maybe the biggest letdown, though (and this isn’t JBL’s fault, but a feature Google needs to enable), is that you can’t add a smart display to your Google Assistant groups. That means you can’t use it as part of a whole-house Google Home audio system, for example. It’s certainly an odd omission given the Link View’s focus on sound, but my understanding is that the same holds true for the Lenovo Smart Display. If this is a deal breaker for you, I’d hold off on buying a Google Assistant smart display for the time being.

You can, however, use the display as a Chromecast receiver to play music from your phone or watch videos. When you’re not using it, the display can show the current time or simply go blank.

Another thing that doesn’t work on smart displays yet is Google’s “Continued Conversation” feature, which lets you give a second command without having to say “OK, Google” again. For now, the smart displays only work in English, too.

When I first heard about these smart displays, I wasn’t sure if they were going to be useful. Turns out, they are. I do live in the Google Assistant ecosystem, though, and I’ve got a few Google Homes set up around my house. If you’re looking to expand your Assistant setup, then the Link View is a nice addition — and if you’re just getting started (or only need one Assistant-enabled speaker/display), then opting for a smart display over a smart speaker may just be the way to go, assuming you can stomach the extra cost.

from Android – TechCrunch https://ift.tt/2xamwO3

via IFTTT

There are a lot of iPhones out there, to be sure. But defining the iPhone as some sort of decade-long continuous camera, which Apple seems to be doing, is sort of a disingenuous way to do it. By that standard, Samsung would almost certainly be ahead, since it would be allowed to count all its Galaxy phones going back a decade as well, and they’ve definitely outsold Apple in that time. Going further, if you were to say that a basic off-the-shelf camera stack and common Sony or Samsung sensor was a “camera,” iPhone would probably be outnumbered 10:1 by Android phones.

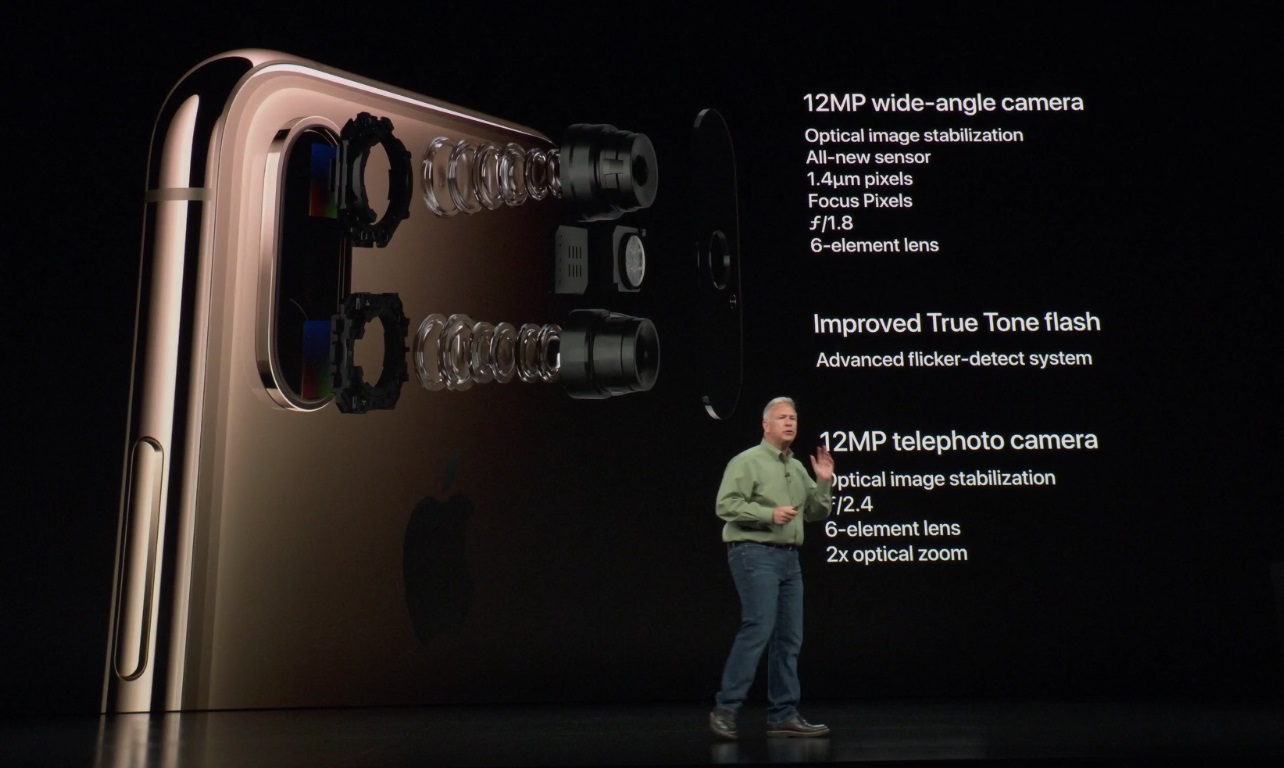

As Phil would explain later, a lot of the newness comes from improvements to the sensor and image processor. But since he called the system new with an exploded view of the camera hardware on the screen behind him, we can assume he was referring to the hardware as well.

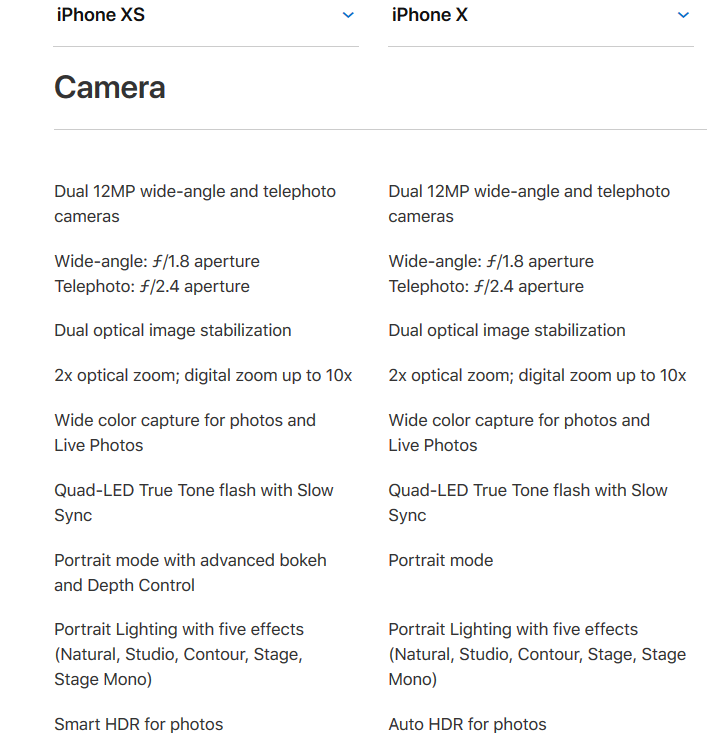

If I said these were different cameras, would you believe me? Same F numbers, no reason to think the image stabilization is different or better, and so on. It would not be unreasonable to guess that, as far as optics go, these are the same cameras as before. Again, not that there was anything wrong with them; they’re fabulous optics. But showing components that are in fact the same and saying they’re different is misleading.
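The “same F numbers” point can be made concrete with the standard photographic relation. This is a textbook approximation for a distant subject that ignores lens transmission and vignetting. In it, $N$ is the f-number, $f$ the focal length, $D$ the entrance-pupil diameter, $E$ the illuminance on the sensor and $L$ the scene luminance. The example apertures are illustrative only, not the iPhone’s specs.

$$
N = \frac{f}{D}, \qquad E \propto \frac{L}{N^{2}}
$$

$$
\frac{E_{f/1.8}}{E_{f/2.0}} = \left(\frac{2.0}{1.8}\right)^{2} \approx 1.23, \qquad \log_2 1.23 \approx 0.3 \text{ stop}
$$

With an unchanged $N$, the ratio is exactly 1. The lens then delivers the same light per unit of sensor area at a given shutter speed, so any low-light gain would have to come from the sensor or the processing rather than the optics.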

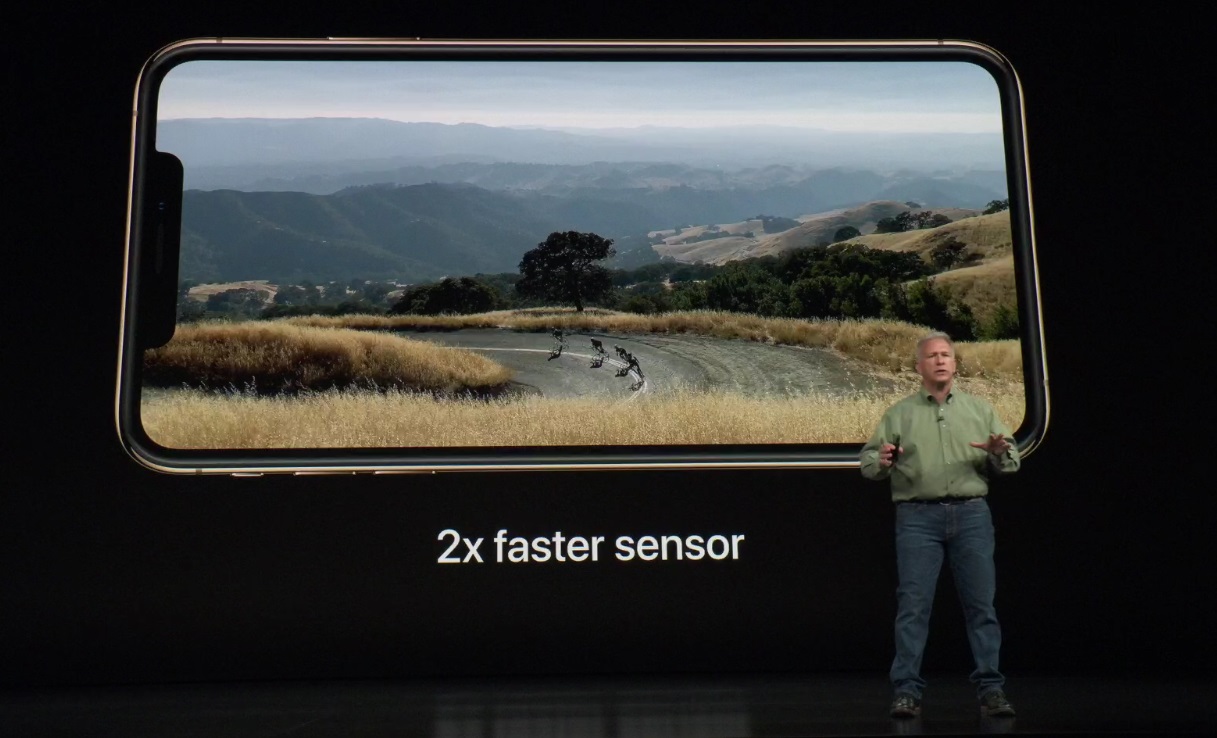

It’s not really clear what he means when he says this. “To take advantage of all this technology.” Is it the readout rate that’s faster? Is it the processor, since that’s what would probably produce better image quality (more horsepower to calculate colors, encode better, and so on)? “Fast” can also refer to light-gathering; is that faster?

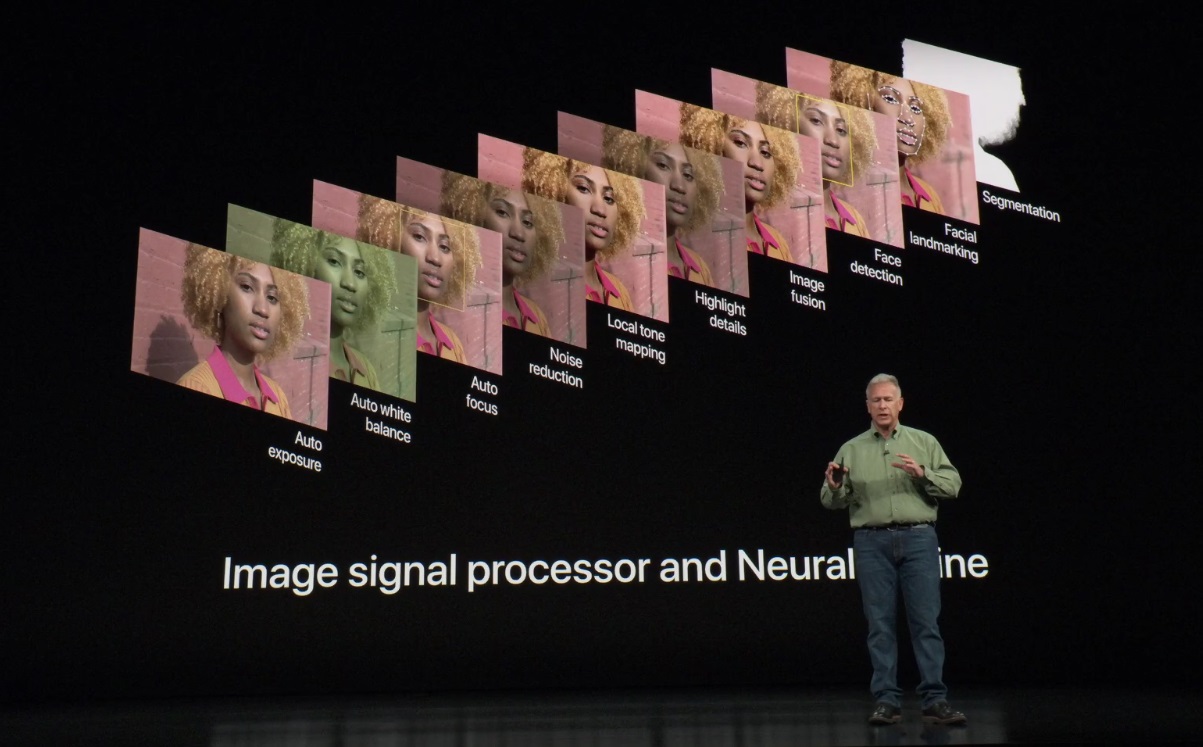

Now, this was a bit of sleight of hand on Phil’s part. Presumably what’s new is that Apple has better integrated the image processing pathway between the traditional image processor, which is doing the workhorse stuff like autofocus and color, and the “neural engine,” which is doing face detection.

Apple’s brand new feature has been on Google’s Pixel phones for a while now. A lot of cameras now keep a frame buffer going, essentially snapping pictures in the background while the app is open, then using the latest one when you hit the button. And Google, among others, had the idea that you could use these unseen pictures as raw material for an HDR shot.
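The background frame buffer and the “unseen pictures as HDR raw material” idea are easier to picture in code. Below is a minimal Python sketch, not how Google or Apple actually implement it. It swaps in a textbook weighted merge of frames taken at different exposure times. It assumes grayscale, already-aligned frames, known exposure times, and a linear sensor that clips at 1.0. `FrameBuffer`, `merge_hdr` and `capture` are hypothetical names chosen for illustration.

```python
from collections import deque
from typing import Deque, List, Tuple

# A frame is (exposure time in seconds, linear pixel values in [0, 1]).
# Assumption: grayscale, perfectly aligned frames, linear sensor response.
Frame = Tuple[float, List[float]]


class FrameBuffer:
    """Hypothetical ring buffer holding the most recent preview frames."""

    def __init__(self, capacity: int) -> None:
        # deque with maxlen silently drops the oldest frame once full
        self._frames: Deque[Frame] = deque(maxlen=capacity)

    def push(self, frame: Frame) -> None:
        self._frames.append(frame)

    def latest(self) -> Frame:
        # "Shutter press": hand back a frame that was already captured
        return self._frames[-1]

    def recent(self, n: int) -> List[Frame]:
        # The last n frames, oldest first, as raw material for a merge
        return list(self._frames)[-n:]


def hat_weight(p: float) -> float:
    # Trust mid-tones most; fully clipped pixels (0 or 1) get zero weight
    return max(0.0, min(p, 1.0 - p))


def merge_hdr(frames: List[Frame]) -> List[float]:
    """Weighted multi-exposure merge into relative scene radiance."""
    shortest = min(frames, key=lambda f: f[0])
    n_pixels = len(frames[0][1])
    radiance = []
    for i in range(n_pixels):
        num = den = 0.0
        for exposure, pixels in frames:
            w = hat_weight(pixels[i])
            num += w * pixels[i] / exposure  # divide out the exposure time
            den += w
        if den > 0:
            radiance.append(num / den)
        else:
            # Every frame clipped or black: fall back to the shortest exposure
            radiance.append(shortest[1][i] / shortest[0])
    return radiance


def capture(scene: List[float], exposure: float) -> Frame:
    """Hypothetical sensor model: linear response, clipping at 1.0."""
    return exposure, [min(1.0, r * exposure) for r in scene]


if __name__ == "__main__":
    scene = [0.5, 4.0]  # a dim pixel and a bright (sky) pixel
    buf = FrameBuffer(capacity=4)
    # While the app is open, keep capturing with alternating exposures
    for t in [0.1, 0.2, 0.4, 0.1, 0.2, 0.4]:
        buf.push(capture(scene, t))
    print(buf.latest())
    print(merge_hdr(buf.recent(3)))
```

The bright pixel clips in the longest exposure, so the merge leans on the shorter ones. The dim pixel is recovered from all three frames.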

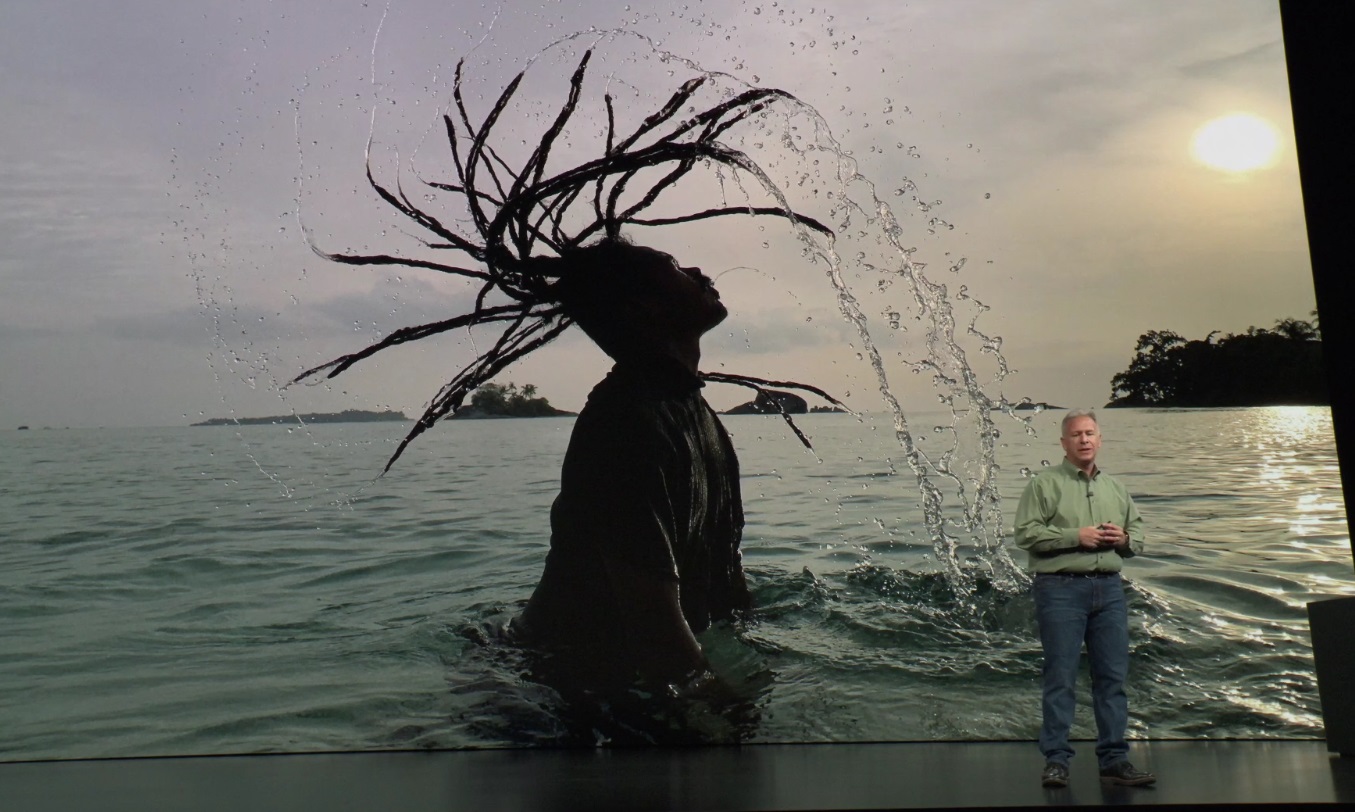

I’m not saying you should shoot directly into the sun, but it’s really not uncommon to include the sun in your shot. In the corner like that, it can make for some cool lens flares. And it won’t blow out the exposure these days, because almost every camera’s auto-exposure algorithm is either center-weighted or shifts around intelligently, to find faces, for instance.
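Center-weighted metering is why a sun in the corner doesn’t wreck the exposure. Here is a minimal Python sketch of the idea. It assumes grayscale luminance in [0, 1], a Gaussian falloff from the frame center (the `sigma_frac` value is an arbitrary choice) and an 18% grey target. Face-priority metering is not modeled, and the function names are hypothetical. Real camera firmware is considerably more involved.

```python
import math
from typing import List

Image = List[List[float]]  # rows of grayscale luminance values in [0, 1]


def center_weighted_mean(image: Image, sigma_frac: float = 0.25) -> float:
    """Mean luminance with a Gaussian weight centred on the frame."""
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    sigma = sigma_frac * max(h, w)
    num = den = 0.0
    for y, row in enumerate(image):
        for x, lum in enumerate(row):
            d2 = (y - cy) ** 2 + (x - cx) ** 2
            weight = math.exp(-d2 / (2 * sigma ** 2))  # corners count for little
            num += weight * lum
            den += weight
    return num / den


def exposure_correction_ev(image: Image, target: float = 0.18) -> float:
    """Stops to add (positive) or remove (negative) to hit a mid-grey target."""
    metered = max(center_weighted_mean(image), 1e-6)  # avoid log2(0) on a black frame
    return math.log2(target / metered)


if __name__ == "__main__":
    # 5x5 mid-grey scene with the sun blown out in the top-left corner
    scene = [[0.18] * 5 for _ in range(5)]
    scene[0][0] = 1.0
    flat_mean = sum(map(sum, scene)) / 25
    print(flat_mean, center_weighted_mean(scene), exposure_correction_ev(scene))
```

A plain average would let the bright corner pull the exposure down. The center-weighted reading stays much closer to the mid-grey subject, so only a small correction is applied.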

This just isn’t true. You can do this on the Galaxy S9, and it’s being rolled out in Google Photos as well. Lytro was doing something like it years and years ago, if we’re including “any type of camera.” I feel kind of bad that no one told Phil. He’s out here without the facts.
