Facebook and Google landed in hot water with Apple this week after two investigations by TechCrunch revealed the misuse of internal-only certificates — leading to their revocation, which led to a day of downtime at the two tech giants.

Confused about what happened? Here’s everything you need to know.

How did all this start, and what happened?

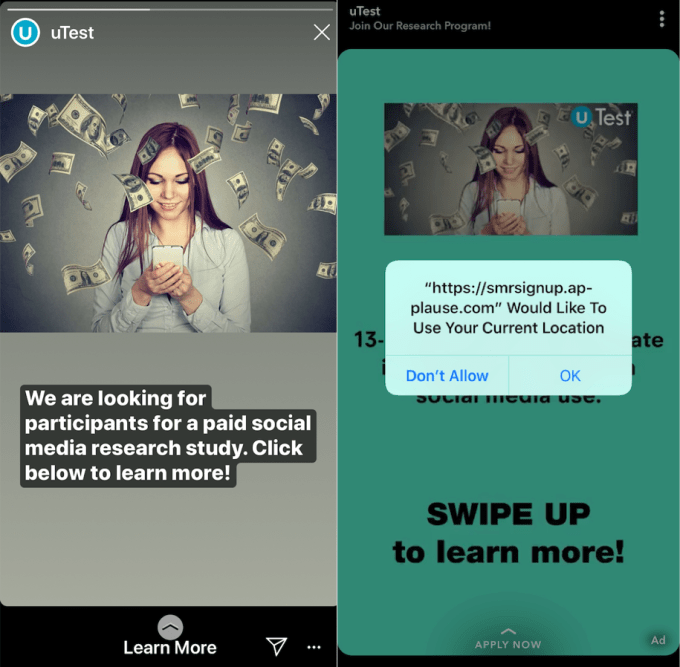

On Monday, we revealed that Facebook was misusing an Apple-issued certificate that is only meant for companies to use to distribute internal, employee-only apps without having to go through the Apple App Store. But the social media giant used that certificate to sign an app that Facebook distributed outside the company, violating Apple’s rules.

The app, known simply as “Research,” allowed Facebook access to all the data flowing out of the device it was installed on. Facebook paid users — including teenagers — $20 per month to install the app. But it wasn’t clear exactly what kind of data was being vacuumed up, or for what reason.

It turns out that the app was a repackaged app that was effectively banned from Apple’s App Store last year for collecting too much data on users.

Apple was angry that Facebook was misusing its special-issue certificate to push an app it had already banned, and revoked the certificate, rendering the app useless. But Facebook was using that same certificate to sign its other employee-only apps, so the revocation effectively knocked them offline until Apple re-issued the certificate.

Then, it turned out Google was doing almost exactly the same thing with its Screenwise app, and Apple’s ban-hammer fell again.

What’s the controversy over these certificates and what can they do?

If you want to develop Apple apps, you have to abide by its rules.

A key rule is that Apple doesn’t allow app developers to bypass the App Store, where every app is vetted to ensure it’s as secure as it can be. It does, however, grant exceptions for enterprise developers, such as companies that want to build apps that are only used internally by employees. Facebook and Google in this case signed up to be enterprise developers and agreed to Apple’s developer terms.

Apple issued each company a certificate that permits it to distribute apps it develops internally, including pre-release versions of the apps it makes, for testing purposes. But these certificates can’t be used to distribute apps to ordinary consumers, who have to download apps through the App Store.

Why is “root” certificate access a big deal?

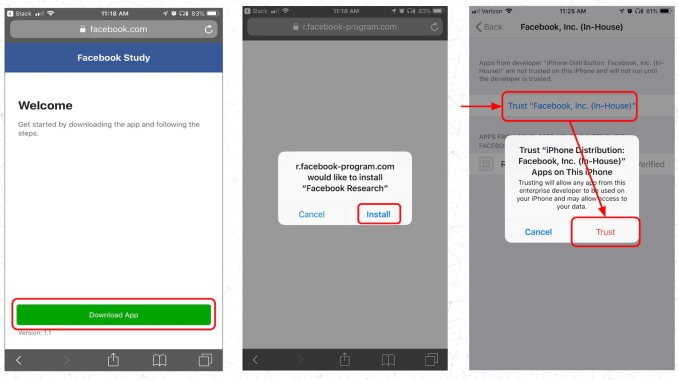

Because Facebook’s Research and Google’s Screenwise apps were distributed outside of Apple’s App Store, users had to install them manually, a process known as sideloading. That involves a convoluted few steps: downloading the app itself, then opening and installing either Facebook’s or Google’s certificate.

Both apps then required users to install a second profile, known as a VPN configuration profile, which funnels all of the data flowing out of that user’s phone down a special tunnel that directs it to either Facebook or Google, depending on the app installed.

This is where Facebook and Google’s cases differ.

Google’s app collected data and sent it off to Google for research purposes, but couldn’t access encrypted data — such as iMessages, or other end-to-end encrypted content.

Facebook, however, went far further. Its users were asked to go through an additional step to trust the certificate at the “root” level of the phone. Trusting this “root certificate” allowed Facebook to look at all of the encrypted traffic flowing out of the device, essentially what we call a “man-in-the-middle” attack. That allowed Facebook to sift through your messages, your emails, and any other bit of data that leaves your phone. Only apps that use certificate pinning, in which an app rejects any certificate that isn’t its own, were protected.
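To make the difference concrete, here is a minimal toy sketch in Python of why trusting an extra root certificate matters, and why pinning still protects an app. It is not how iOS or either company's app actually works: the `ToyCert` type, the names "Public CA" and "Research Root," and the host `mail.example.com` are all hypothetical, and real validation verifies cryptographic signatures rather than comparing issuer names.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyCert:
    """Hypothetical, heavily simplified stand-in for a TLS certificate."""
    subject: str   # the host the certificate claims to represent
    issuer: str    # name of the root that vouches for it (assumption: one-level chain)
    der: bytes     # placeholder for the certificate's encoded bytes


def fingerprint(cert):
    """SHA-256 fingerprint of the certificate bytes, as hex."""
    return hashlib.sha256(cert.der).hexdigest()


def default_validation(cert, trusted_roots):
    """Accept any certificate vouched for by a root the device trusts."""
    return cert.issuer in trusted_roots


def pinned_validation(cert, trusted_roots, pins):
    """Additionally require the certificate to match a fingerprint shipped with the app."""
    return default_validation(cert, trusted_roots) and fingerprint(cert) in pins


# The real server's certificate, and one minted by an interceptor sitting in the tunnel.
genuine = ToyCert("mail.example.com", "Public CA", b"genuine-server-cert")
forged = ToyCert("mail.example.com", "Research Root", b"interceptor-issued-cert")

stock_roots = {"Public CA"}
roots_after_install = stock_roots | {"Research Root"}  # user trusted the extra root
pins = {fingerprint(genuine)}  # what a pinning app would ship with

print(default_validation(forged, stock_roots))            # interceptor rejected
print(default_validation(forged, roots_after_install))    # interceptor accepted
print(pinned_validation(forged, roots_after_install, pins))   # pinning app still rejects
```

In this sketch, the forged certificate only passes once the extra root is trusted, which is the step Facebook's app asked users to take. The pinning check fails regardless of what the device trusts, because it compares against a fingerprint baked into the app.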

Facebook’s Research app requires Root Certificate access, which lets Facebook gather almost any piece of data transmitted by your phone. (Image: supplied)

Google’s app might not have been able to look at encrypted traffic, but the company still flouted the rules and got its certificate revoked anyway.

What data did Facebook have access to on iOS?

It’s hard to know for sure, but it definitely had access to more data than Google.

Facebook said its app was to help it “understand how people use their mobile devices.” In reality, with root certificate access to the phone’s network traffic, Facebook could have accessed any kind of data that left your phone.

Will Strafach, a security expert who we spoke to for our story, said: “If Facebook makes full use of the level of access they are given by asking users to install the certificate, they will have the ability to continuously collect the following types of data: private messages in social media apps, chats from in instant messaging apps – including photos/videos sent to others, emails, web searches, web browsing activity, and even ongoing location information by tapping into the feeds of any location tracking apps you may have installed.”

Remember: this isn’t “root” access to your phone, like jailbreaking, but root access to the network traffic.

How does this compare to the technical ways other market research programs work?

In fairness, market research apps like these aren’t unique to Facebook or Google. Several other companies, like Nielsen and comScore, run similar programs, but neither asks users to install a VPN or provide root access to the network.

In any case, Facebook already has a lot of your data, as does Google. Even if the companies only wanted to look at your data in aggregate with other people’s, they could still home in on who you talk to, when, for how long, and in some cases what about. It might not have been such an explosive scandal had Facebook not spent the last year cleaning up after several security and privacy breaches.

Can they capture the data of people the phone owner interacts with?

In both cases, yes. In Google’s case, any unencrypted data that includes another person’s information could have been collected. In Facebook’s case, it goes far further: anything you exchange with another person, such as an email or a message, could have been collected by Facebook’s app.

How many people did this affect?

It’s hard to know for sure. Neither Google nor Facebook has said how many users its app has. Between them, it’s believed to be in the thousands. As for the employees affected by the app outages, Facebook has more than 35,000 employees and Google has more than 94,000 employees.

Why did internal apps at Facebook and Google break after Apple revoked the certificates?

You might own your Apple device, but Apple still gets to control what goes on it.

After Facebook was caught out, Apple said: “Any developer using their enterprise certificates to distribute apps to consumers will have their certificates revoked, which is what we did in this case to protect our users and their data.” That meant any app that relied on the certificate, including those used inside the company, would fail to load. That included not just the pre-release builds of Facebook, Instagram and WhatsApp that staff were working on but, reportedly, the company’s travel and collaboration apps as well. In Google’s case, even its catering and lunch menu apps were down.

Facebook’s internal apps were down for about a day, while Google’s internal apps were down for a few hours. None of Facebook or Google’s consumer services were affected, however.

How are people viewing Apple in all this?

Nobody seems thrilled with Facebook or Google at the moment, but not many are happy with Apple, either. Even though Apple sells hardware and doesn’t use your data to profile you or serve you ads — like Facebook and Google do — some are uncomfortable with how much power Apple has over the customers — and enterprises — that use its devices.

By revoking Facebook’s and Google’s enterprise certificates, Apple caused downtime that had a knock-on effect inside both companies.

Is this legal in the U.S.? What about in Europe with GDPR?

Well, it’s not illegal, at least in the U.S. Facebook says it gained consent from its users. The company even said its teenage users must obtain parental consent, even though that step was easily skippable and no verification checks were made. It wasn’t even clear that the children who “consented” really understood how much privacy they were handing over.

That could lead to major regulatory headaches down the line. “If it turns out that European teens have been participating in the research effort Facebook could face another barrage of complaints under the bloc’s General Data Protection Regulation (GDPR) — and the prospect of substantial fines if any local agencies determine it failed to live up to consent and ‘privacy by design’ requirements baked into the bloc’s privacy regime,” wrote TechCrunch’s Natasha Lomas.

Who else has been misusing certificates?

Don’t think that Facebook and Google are alone in this. It turns out that a lot of companies might be flouting the rules, too.

According to the many people who have been finding such companies and flagging them on social media, Sonos uses enterprise certificates for its beta program, as does finance app Binance, as well as DoorDash for its fleet of contractors. It’s not known if Apple will also revoke their certificates.

What next?

It’s anybody’s guess, but don’t expect this situation to die down any time soon.

Facebook may face repercussions with Europe, as well as at home. Two U.S. senators, Mark Warner and Richard Blumenthal, have already called for action, accusing Facebook of “wiretapping teens.” The Federal Trade Commission may also investigate, if Blumenthal gets his way.

from Apple – TechCrunch https://tcrn.ch/2HLKWoY