Back in 2015, Google’s ATAP team demoed a new kind of wearable tech at Google I/O that used functional fabrics and conductive yarns to allow you to interact with your clothing and, by extension, the phone in your pocket. The company then released a jacket with Levi’s in 2017, but at $350 it was expensive and never quite caught on. Now, Jacquard is back. A few weeks ago, Saint Laurent launched a backpack with Jacquard support, but at $1,000, that was very much a luxury product. Today, Google and Levi’s are announcing their latest collaboration: Jacquard-enabled versions of Levi’s Trucker Jacket.

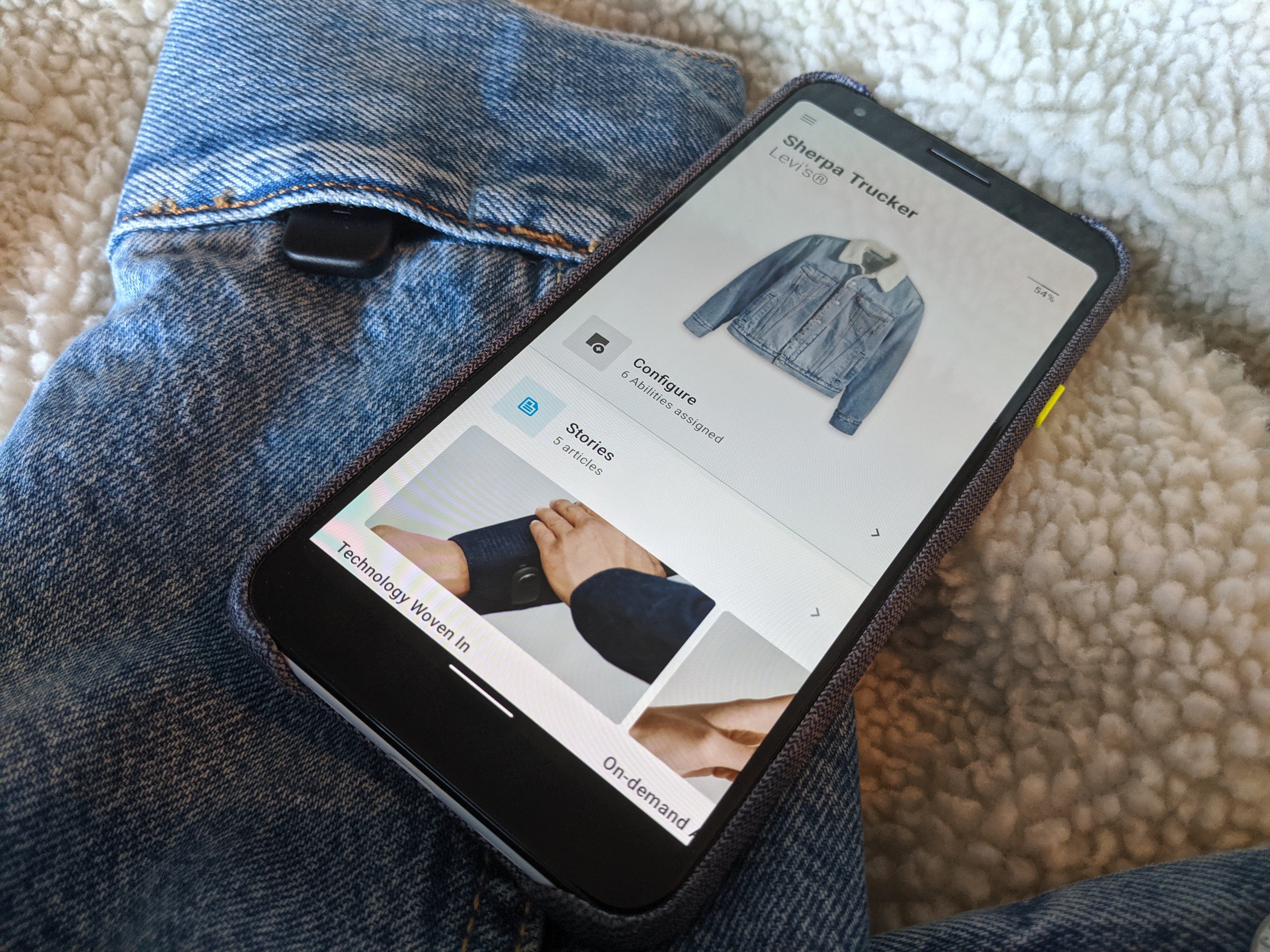

These jackets will come in different styles, including the Classic Trucker and the Sherpa Trucker, in both men’s and women’s versions. The Classic Trucker will retail for $198 and the Sherpa Trucker for $248. In addition to the U.S., the jackets will be available in Australia, France, Germany, Italy, Japan and the U.K.

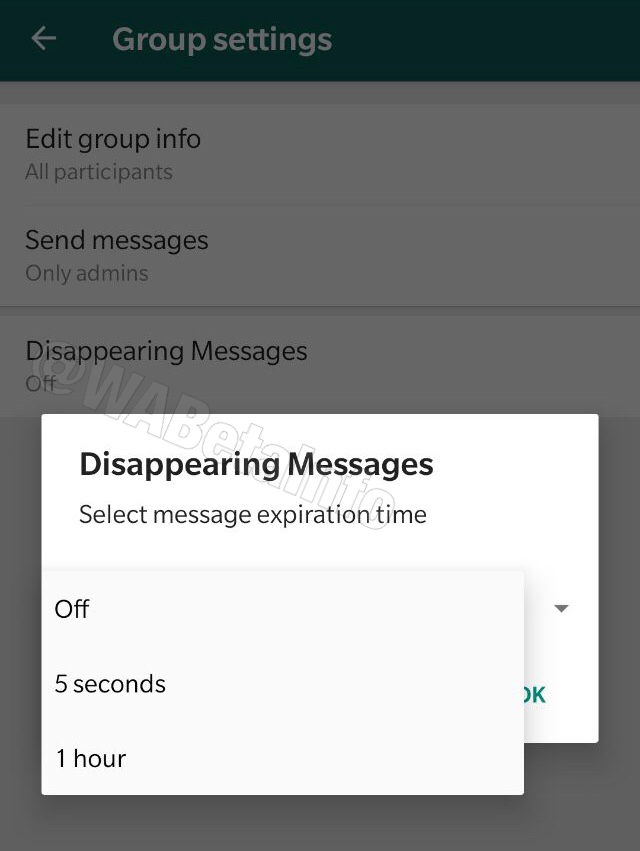

The idea here is simple and hasn’t changed since the original launch: a dongle in your jacket’s cuff connects to conductive yarns woven into the jacket. You can then swipe over your cuff, tap it or hold your hand over it to issue commands to your phone. You use the Jacquard phone app for iOS or Android to set up what each gesture does. Commands range from saving your location to bringing up the Google Assistant in your headphones, and from skipping to the next song to controlling your camera for selfies or simply counting things during the day, like the coffees you drink on the go. If you have Bose noise-canceling headphones, the app also lets you set a gesture to turn noise cancellation on or off. In total, there are currently 19 abilities available, and the dongle also includes a vibration motor for notifications.

What’s maybe most important, though, is that this (re-)launch sets up Jacquard as a more modular technology that Google and its partners hope will take it from a bit of a gimmick to something you’ll see in more places over the next few months and years.

“Since we launched the first product with Levi’s at the end of 2017, we were focused on trying to understand and working really hard on how we can take the technology from a single product […] to create a real technology platform that can be used by multiple brands and by multiple collaborators,” Ivan Poupyrev, the head of Jacquard by Google, told me. He noted that the idea behind projects like Jacquard is to take things we use every day, like backpacks, jackets and shoes, and make them better with technology. He argued that, for the most part, technology hasn’t really been added to these everyday objects. He wants to work with companies like Levi’s to “give people the opportunity to create new digital touchpoints to their digital life through things they already have and own and use every day.”

What’s also important about Jacquard 2.0 is that you can take the dongle from garment to garment. The original dongle only worked with that one specific jacket; now, you’ll be able to take it with you and use it in other wearables as well. The dongle is also significantly smaller and more powerful, and it now has more memory to support multiple products. In my own testing, its battery lasted a few days of occasional use, with plenty of standby time.

Poupyrev also noted that the team focused on reducing cost, “in order to bring the technology into a price range where it’s more attractive to consumers.” The team also made lots of changes to the software that runs on the device and, more importantly, in the cloud to allow it to configure itself for every product it’s being used in and to make it easier for the team to add new functionality over time (when was the last time your jacket got a software upgrade?).

Poupyrev actually hopes that over time, people will forget that Google was involved in this. He wants the technology to fade into the background. Levi’s, on the other hand, obviously hopes that this technology will enable it to reach a new market. The 2017 version only included the Levi’s Commuter Trucker Jacket. Now, the company is going broader with different styles.

“We had gone out with a really sharp focus on trying to adapt the technology to meet the needs of our commuter customer, which [is] a collection of Levi’s focused on urban cyclists,” Paul Dillinger, the VP of Global Product Innovation at Levi’s, told me when I asked him about the company’s original efforts around Jacquard. There was a lot of interest beyond that community, he said, but the built-in features were very much meant to serve the needs of this specific audience and weren’t necessarily relevant to the lifestyles of other users. The jackets, of course, were also pretty expensive. “There was an appetite for the technology to do more and be more accessible,” he said. These new jackets are the result of that work.

Dillinger also noted that this changes the relationship his company has with the consumer, because Levi’s can now upgrade the technology in your jacket after you’ve bought it. “This is a really new experience,” he said. “And it’s a completely different approach to fashion. The normal fashion promise from other companies really is that we promise that in six months, we’re going to try to sell you something else. Levi’s prides itself on creating enduring, lasting value in style and we are able to actually improve the value of the garment that was already in the consumer’s closet.”

I spent about a week with the Sherpa jacket before today’s launch. It does exactly what it promises to do. Pairing my phone and jacket took less than a minute, and the connection between the two has been perfectly stable. The gesture recognition worked very well, maybe better than I expected. What it can do, it does well, and I appreciate that the team kept the functionality pretty narrow.

Whether Jacquard is for you may depend on your lifestyle, though. I think the ideal user is somebody who is out and about a lot and wears headphones, given that music controls are one of the main features here. But you don’t have to wear headphones to get value out of Jacquard. I almost never wear headphones in public, but I used it to quickly tag where I parked my car, for example. And when I did use it with headphones, I found it easier to skip to the next song with my jacket’s cuff than with the controls on my headphones. Your mileage may vary, of course. While I like the idea of using this kind of tech so you need to take out your phone less often, I wonder whether that ship has already sailed, and whether the controls on your headphones can already do most of the things Jacquard can. Google surely wants Jacquard to be more than a gimmick, but at this stage, it kind of still is.

from Android – TechCrunch https://ift.tt/2miIpIK