For the past eighteen months, the iPad Pro has been my only machine away from home, and until recently, I was away from home a lot, traveling domestically and internationally to event locations around the world or our offices in San Francisco, New York and London. Every moment of every day that I wasn’t at my home desk, the iPad Pro was my main portable machine.

I made the switch on a trip to Brazil for our conference and Startup Battlefield competition (which was rad, by the way, a computer vision cattle scale won the top prize) on somewhat of a whim. I thought I’d take this one-week trip to make sure I got a good handle on how the iPad Pro would perform as a work device and then move back to my trusty 13” MacBook Pro.

The trip changed my mind completely about whether I could run TechCrunch wholly from a tablet. It turns out that it was lighter, smoother and more willing than my MacBook at nearly every turn. I never went back.

iPad Pro, 2018, Brazil

The early days were absolutely full of growing pains for both the iPad and myself. Rebuilding workflows by patching together the share sheet and automation tools and the newly introduced Shortcuts was a big part of making it a viable working machine at that point. And the changes that came with iPadOS that boosted Slide Over, Split View and the home screen were welcome in that they made the whole device feel more flexible.

The past year and a half has taught me a lot about what the absolute killer features of the iPad Pro are, while also forcing me to learn about the harsher trade-offs I would have to make for carrying a lighter, faster machine than a laptop.

All of which is to set the context for my past week with the new version of that machine.

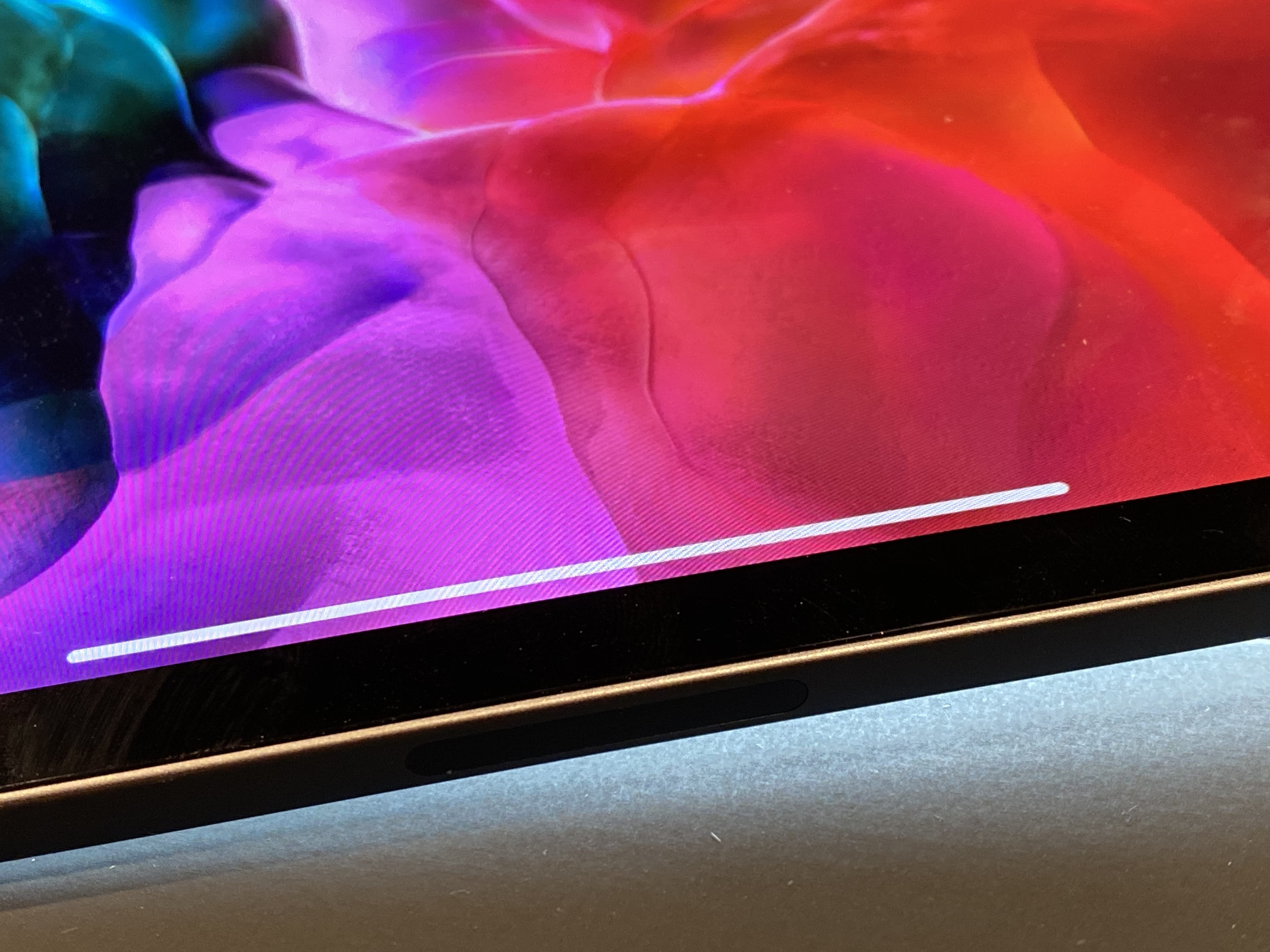

For the greater part, this new 2020 iPad Pro still looks much the same as the one released in 2018. Aside from the square camera array, it’s a near twin. The good news on that front is that you can tell Apple nailed the ID the first time because it still feels super crisp and futuristic almost two years later. The idealized expression of a computer. Light, handheld, powerful and functional.

The 12.9” iPad Pro that I tested contains the new A12Z chip which performs at a near identical level to the same model I’ve been using. At over 5015 single-core and over 18,000 multi-core scores in Geekbench 4, it remains one of the more powerful portable computers you can own, regardless of class. The 1TB model appears to still have 6GB of RAM, though I don’t know if that’s still stepped down for the lower models to 4GB.

This version adds an additional GPU core and “enhanced thermal architecture” — presumably better heat distribution under load but that was not especially evident given that the iPad Pro has rarely run hot for me. I’m interested to see what teardowns turn up here. New venting, piping or component distribution perhaps. Or something on-die.

It’s interesting, of course, that this processor is so close in performance (at least at a CPU level) to the A12X Bionic chip. Even at a GPU level Apple says nothing more than that it is faster than the A12X with none of the normal multipliers it typically touts.

The clearest answer for this appears to be that this is a true “refresh” of the iPad Pro. There are new features, which I’ll talk about next, but on the whole this is “the new one” in a way that is rarely but sometimes true of Apple devices. Whatever they’ve learned and are able to execute currently on hardware without a massive overhaul of the design or implementation of hardware is what we see here.

I suppose my one note on this is that the A12X still feels fast as hell and I’ve never wanted for power so, fine? I’ve been arguing against speed bumps at the cost of usability forever, so now is the time I make good on those arguments and don’t really find a reason to complain about something that works so well.

CamARa

The most evident physical difference on the new iPad Pro is, of course, the large camera array, which contains a 10MP ultra wide and a 12MP wide camera. These work to spec, but it’s the new lidar scanner that is the most intriguing addition.

It is inevitable that we will eventually experience the world on several layers at once. The physical layer we know will be augmented by additional rings of data like the growth rings of a redwood.

In fact, that future has already come for most of us, whether we realize it or not. Right now, we experience these layers mostly in an asynchronous fashion by requesting their presence. Need a data overlay to tell you where to go? Call up a map with turn-by-turn. Want to know the definition of a word or the weather? Ask a voice assistant.

The next era beyond this one, though, is passive, contextually driven info layers that are presented to us proactively visually and audibly.

We’ve been calling this either augmented reality or mixed reality, though I think that neither one of those is ultimately very descriptive of what will eventually come. The augmented human experience has started with the smartphone, but will slowly work its way closer to our cerebellum as we progress down the chain from screens to transparent displays to lenses to ocular implants to brain-stem integration.

If you’re rolling your un-enhanced eyes right now, I don’t blame you. But that doesn’t mean I’m not right. Bookmark this and let’s discuss in 2030.

In the near term, though, the advancement of AR technology is being driven primarily by smartphone experiences. And those are being advanced most quickly by Google and Apple with the frameworks they are offering to developers to integrate AR into their apps and the hardware that they’re willing to fit onboard their devices.

One of the biggest hurdles to AR experiences being incredibly realistic has been occlusion. This is the effect that allows one object to intersect with another realistically, obscuring or hiding it in a way that tells our brain that “this is behind that.” Occlusion leads to a bunch of interesting things like shared experiences, interaction of physical and digital worlds and just general believability.
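To make the idea concrete, here is a minimal sketch of the per-pixel depth comparison that occlusion boils down to. This is not ARKit's implementation. The function name `composite_with_occlusion` and the four-pixel scene are hypothetical, chosen only for illustration. A virtual pixel is drawn only when it sits closer to the camera than the real surface at that same pixel.

```python
def composite_with_occlusion(real_depth, virtual_depth, camera_px, virtual_px):
    """Pick, per pixel, either the camera image or the virtual object.

    Depths are distances from the camera in meters. A virtual depth of
    None means no virtual object covers that pixel.
    """
    out = []
    for real_d, virt_d, cam, virt in zip(real_depth, virtual_depth, camera_px, virtual_px):
        # The virtual pixel wins only if it is in front of the real surface.
        if virt_d is not None and virt_d < real_d:
            out.append(virt)
        else:
            out.append(cam)
    return out


# Hypothetical row of four pixels: a sofa at 2.0 m in front of a wall at 4.0 m.
real_depth = [4.0, 2.0, 2.0, 4.0]
camera_px = ["wall", "sofa", "sofa", "wall"]

# A virtual lamp placed 3.0 m away, overlapping the last three pixels.
virtual_depth = [None, 3.0, 3.0, 3.0]
virtual_px = [None, "lamp", "lamp", "lamp"]

row = composite_with_occlusion(real_depth, virtual_depth, camera_px, virtual_px)
print(row)  # ['wall', 'sofa', 'sofa', 'lamp']
```

The lamp shows up only where the wall is behind it. Where the sofa is closer, the sofa hides it, which is exactly the “this is behind that” cue. The hard part in practice is getting a trustworthy `real_depth` for every pixel.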

This is where the iPad Pro’s lidar scanner comes in. With lidar, two major steps forward are possible for AR applications.

- Initialization time is nearly instantaneous. Because lidar works at the speed of light, reading pulses of light it sends out and measuring their “flight” times to determine the shape of objects or environments, it is very fast. That typical “fire it up, wave it around and pray” AR awkwardness should theoretically be eliminated with lidar.

- Occlusion becomes automatic. It no longer requires calculations be done using the camera, small hand movements and computer vision to “guess” at the shape of objects and their relationship to one another. Developers essentially get all of this for “free” computationally and at blazing speed.
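The “flight” time measurement in the first point comes down to simple arithmetic: a pulse travels out to a surface and back, so the distance is the speed of light multiplied by the round-trip time, divided by two. Below is a minimal sketch of that relationship. The function names and the 5-meter example are hypothetical and are not Apple's figures or API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter


def distance_from_flight_time(round_trip_seconds):
    """Distance to a surface, given how long a light pulse took to return."""
    # Halve the path because the pulse covers the distance twice (out and back).
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


def flight_time_for_distance(distance_m):
    """Round-trip time a pulse needs to reach a surface and come back."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Hypothetical example: a wall 5 meters from the sensor.
round_trip = flight_time_for_distance(5.0)
print(f"{round_trip * 1e9:.1f} ns")  # 33.4 ns
print(distance_from_flight_time(round_trip))
```

At room scale, a round trip takes tens of nanoseconds, which is why a scan can feel instantaneous compared with waving a camera around to gather parallax.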

There’s a reason lidar is used in many autonomous free roaming vehicle systems and semi-autonomous driving systems. It’s fast, relatively reliable and a powerful mapping tool.

ARKit 3.5 now supplies the ability to create a full topological 3D mesh of an environment with plane and surface detection. It also comes with greater precision than was possible with a simple camera-first approach.

Unfortunately, I was unable to test this system; applications that take advantage of it are not yet available, though Apple says many are on their way from games like Hot Lava to home furnishing apps like Ikea. I’m interested to see how effective this addition is to iPad as it is highly likely that it will also come to the iPhone this year or next at the latest.

One thing I am surprised but not totally shocked by is that the iPad Pro rear-facing camera does not do Portrait photos. Only the front-facing True Depth camera does Portrait mode here.

My guess is that there is a far more accurate Portrait mode coming to iPad Pro that utilizes the lidar array as well as the camera, and it is just not ready yet. There is no reason that Apple should not be able to execute a Portrait style image with an even better understanding of the relationships of subjects to backgrounds.

Lidar is a technology with a ton of promise and a slew of potential applications. Having this much more accurate way to bring the outside world into your device is going to open a lot of doors for Apple and developers over time, but my guess is that we’ll see those doors open over the next couple of years rather than all at once.

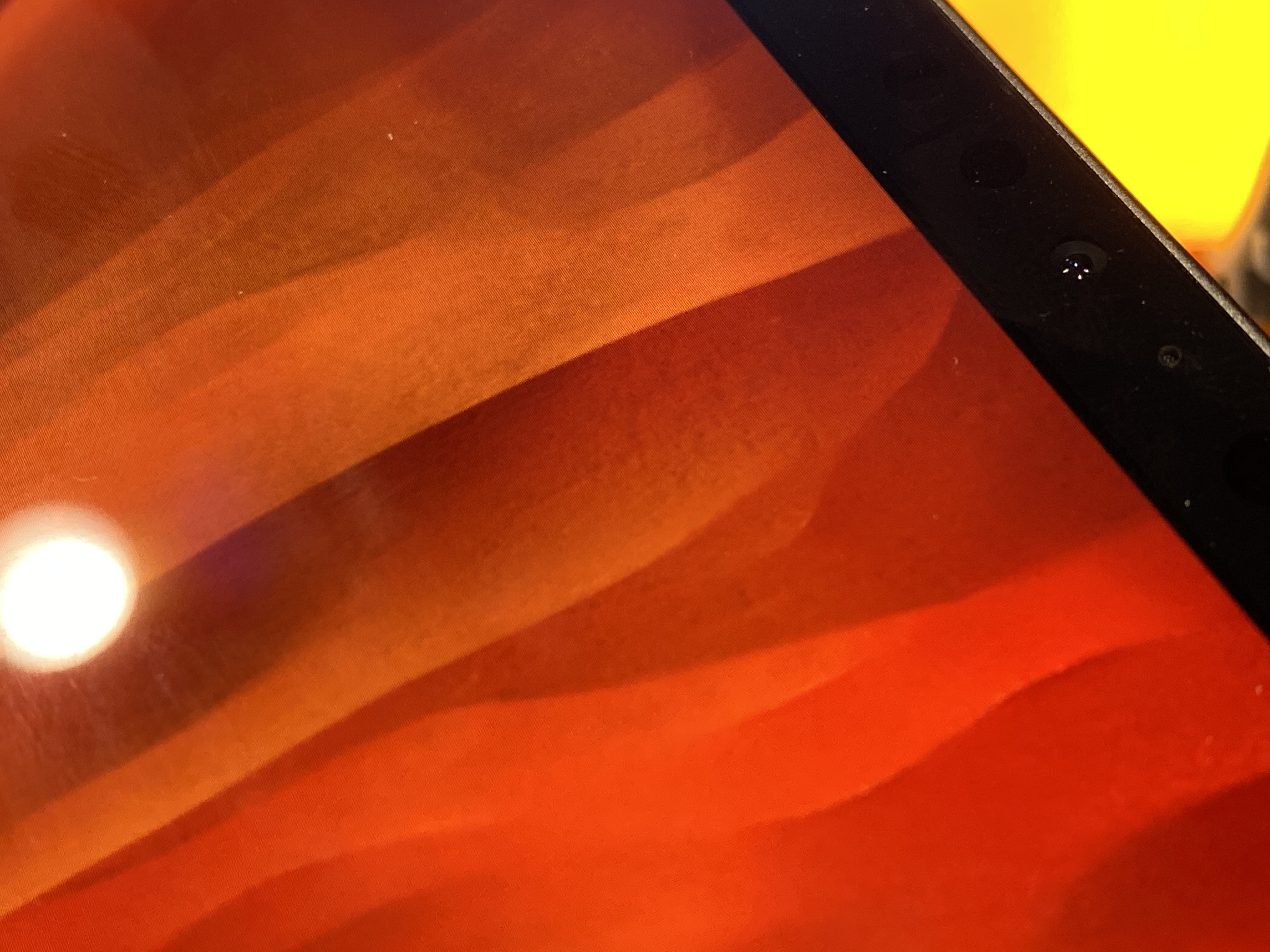

One disappointment for me is that the True Depth camera placement remains unchanged. In a sea of fantastic choices that Apple made about the iPad Pro’s design, the placement of the camera in a location most likely to be covered by your hand when it is in landscape mode is a standout poor one.

Over the time I’ve been using iPad Pro as my portable machine I have turned it to portrait mode a small handful of times, and most of those were likely because an app just purely did not support landscape.

This is a device that was born to be landscape, and the camera should reflect that. My one consideration here is that the new “floating” design of the Magic Keyboard that ships in May will raise the camera up and away from your hands and may, in fact, work a hell of a lot better because of it.

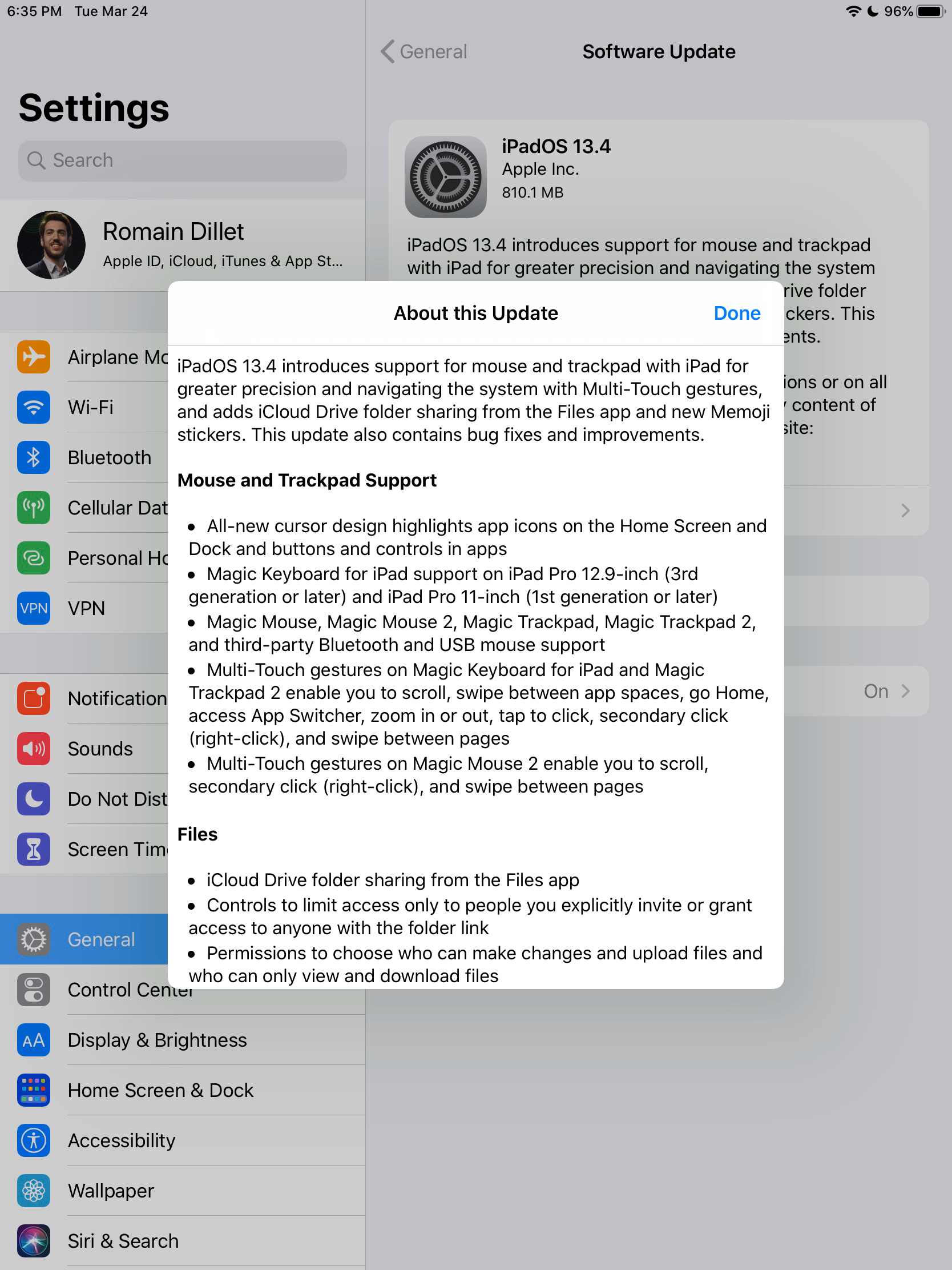

Keyboard and trackpad support

At this point, enough people have seen the mouse and trackpad support to have formed some opinions on it. In general, the response has been extremely positive, and I agree with that assessment. There are minor quibbles about how much snap Apple is applying to the cursor as it attaches itself to buttons or actions, but overall the effect is incredibly pleasant and useful.

Re-imagining the cursor as a malleable object rather than a hard-edged arrow or hand icon makes a ton of sense in a touch environment. We’re used to our finger becoming whatever tool we need it to be — a pencil or a scrubber or a button pusher. It only makes sense that the cursor on iPad would also be contextually aware as well.

I was only able to use the Magic Trackpad so far, of course, but I have high hopes that it should fall right into the normal flow of work when the Magic Keyboard drops.

And, given the design of the keyboard, I think that it will be nice to be able to keep your hands on the keyboard and away from poking at a screen that is now much higher than it was before.

Surface Comparisons

I think that with the addition of the trackpad to the iPad Pro there has been an instinct to say, “Hey, the Surface was the right thing after all.” I’ve been thinking about this at one point or another for a couple of years now as I’ve been daily driving the iPad.

I made an assessment back in 2018 about this whole philosophical argument, and I think it’s easiest to just quote it here:

One basic summary of the arena is that Microsoft has been working at making laptops into tablets, Apple has been working on making tablets into laptops and everyone else has been doing who knows what.

Microsoft still hasn’t been able (come at me) to ever get it through their heads that they needed to start by cutting the head off of their OS and building a tablet first, then walking backwards. I think now Microsoft is probably much more capable than then Microsoft, but that’s probably another whole discussion.

Apple went and cut the head off of OS X at the very beginning, and has been very slowly walking in the other direction ever since. But the fact remains that no Surface Pro has ever offered a tablet experience anywhere near as satisfying as an iPad’s.

Yes, it may offer more flexibility, but it comes at the cost of unity and reliable functionality. Just refrigerator toasters all the way down.

Still holds, in my opinion, even now.

Essentially, I find the thinking that the iPad has arrived at the doorstep of the Surface because the iPad’s approach was not correct to be narrow. It focuses on hardware, when the reality is that Windows has never been properly adjusted for touch. Apple is coming at this touch first, even as it adds cursor support.

To reiterate what I said above, I am not saying that “the Surface approach is bad” here so go ahead and take a leap on that one. I think the Surface team deserves a ton of credit for putting maximum effort into a convertible computer at a time when nearly the entire industry was headed in another direction. But I absolutely disagree that the iPad is “becoming the Surface” because the touch experience on the Surface is one of the worst of any tablet and the iPad is (for all of the interface’s foibles) indisputably the best.

It is one of the clearer examples of attempting to solve a similar problem from different ends in recent computing design.

That doesn’t mean, however, that several years of using the iPad Pro have been without a flaw.

iPad Promise

Back in January, Apple writer and critic John Gruber laid out his case for why the iPad has yet to meet its full potential. The conclusions, basically, were that Apple had missed the mark on the multi-tasking portion of its software.

At the time, I believed a lot of really good points had been made by John and others who followed on, and though I had thoughts, I wasn’t really ready to crystallize them. I think I’m ready now, though. Here’s the nut of it:

The focus of the iPad Pro, its North Star, must be speed and capability, not ease of use.

Think about the last time that you, say, left your MacBook or laptop sitting for a day or two or ten. What happened when you opened it? Were you greeted with a flurry of alerts and notifications and updates and messages? Were you able to, no matter how long or short a time you had been away from it, open it and start working immediately?

With iPad Pro, no matter where I have been or what I have been doing, I was able to flip it open, swipe up and be issuing my first directive within seconds. As fast as my industry moves and as wild as our business gets, that kind of surety is literally priceless.

Never once, however, did I wish that it was easier to use.

Do you wish that a hammer were easier to use? No, you learn to hold it correctly and swing it accurately. The iPad could use a bit more of that.

Currently, iPadOS is still too closely tethered to the sacred cow of simplicity. In a strange bout of irony, the efforts on behalf of the iPad software team to keep things simple (same icons, same grid, same app switching paradigms) and true to their original intent have instead caused a sort of complexity to creep into the arrangement.

I feel that many of the issues surrounding the iPad Pro’s multi-tasking system could be corrected by giving professional users a way to immutably pin apps or workspaces in place, offering them the ability to “break” the multitasking methodology that has served the iPad for years in service of making their workspaces feel like their own. Ditch the dock entirely and make that a list of pinned spaces that can be picked from at a tap. Lose the protected status of app icons and have them reflect what is happening in those spaces live.

The above may all be terrible ideas, but the core of my argument is sound. Touch interfaces first appeared in the 70’s and have been massively popular for at least a dozen years by now.

The iPad Pro user of today is not new to a touch-based interface and is increasingly likely to have never known a computing life without touch interfaces.

If you doubt me, watch a kid bounce between six different apps putting together a simple meme or message to send to a friend. It’s a virtuoso performance that they give dozens of times a day. These users are touch native. They deserve to eat meat, not milk.

This device is still massively compelling, regardless, for all of the reasons I outlined in 2018 and still feel strongly about today. But I must note that there is little reason so far to upgrade to this from the 2018 iPad Pro. And given that the Magic Keyboard is backward compatible, it won’t change that.

If you don’t currently own an iPad Pro, however, and you’re wondering whether you can work on it or not, well, I can and I did and I do. Talking to 30 employees on multiple continents and time zones while managing the editorial side of a complex, multi-faceted editorial, events and subscription business.

I put 100,000 (airline) miles on the iPad Pro and never once did it fail me. Battery always ample; speed always constant; keyboard not exactly incredible but also spill sealed and bulletproof. I can’t say that of any laptop I’ve ever owned, Apple included.

I do think that the promise of the integrated trackpad and a leveling up of the iPad’s reason to be makes the Magic Keyboard and new iPad Pro one of the more compelling packages currently on the market.

I loved the MacBook Air and have used several models of it to death over the years. There is no way, today, that I would choose to go back to a laptop given my style of work. The iPad Pro is just too fast, too reliable and too powerful.

It’s insane to have a multi-modal machine that can take typing, swiping and sketching as inputs and has robust support for every major piece of business software on the planet — and that always works, is always fast and is built like an Italian racing car.

Who can argue with that?
