South Korea has successfully slowed the spread of coronavirus. Alongside widespread quarantine measures and testing, the country's innovative use of technology is credited as a critical factor in combating the disease. As Europe and the United States struggle to cope, many governments are turning to AI tools both to advance medical research and to manage public health, now and in the long term: technical solutions for contact tracing, symptom tracking, immunity certificates and other applications are underway. These technologies are certainly promising, but they must be implemented in ways that do not undermine human rights.

Seoul has extensively and intrusively collected its citizens' personal data, analyzing millions of data points from credit card transactions, CCTV footage and cellphone geolocation data. South Korea's Ministry of the Interior and Safety even developed a smartphone app that shares the GPS data of self-quarantined individuals with officials. If those in quarantine cross the "electronic fence" of their assigned area, the app alerts officials. The privacy and security implications of such widespread surveillance are deeply concerning.
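To make the "electronic fence" concrete, here is a minimal sketch of how such a check could work, assuming a circular fence around the assigned address and a great-circle (haversine) distance test on each GPS fix. The ministry's actual app logic is not described here, so the `Geofence` class, the 100-meter radius and the coordinates are hypothetical.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Geofence:
    """Hypothetical circular fence around a quarantine address."""
    lat: float
    lon: float
    radius_m: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def crossed_fence(fence: Geofence, lat: float, lon: float) -> bool:
    """True if a GPS fix lies outside the fence, the case that would trigger an alert."""
    return haversine_m(fence.lat, fence.lon, lat, lon) > fence.radius_m


# Usage: a hypothetical 100 m fence around a point in Seoul
home = Geofence(lat=37.5663, lon=126.9779, radius_m=100)
print(crossed_fence(home, 37.5663, 126.9779))  # False
print(crossed_fence(home, 37.5673, 126.9779))  # True (about 111 m north)
```

The point is how little is needed: a single coordinate pair streamed to officials is enough to flag a person, which is why the data flow, not the arithmetic, is where the privacy risk lies.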

South Korea is not alone in leveraging personal data in containment efforts. China, Iran, Israel, Italy, Poland, Singapore, Taiwan and others have used location data from cellphones for various applications aimed at combating coronavirus. Supercharged with artificial intelligence and machine learning, this data can be used not only for social control and monitoring but also to predict travel patterns, pinpoint future outbreak hot spots, model chains of infection or project immunity.

The implications for human rights and data privacy reach far beyond the containment of COVID-19. Introduced as short-term fixes for the immediate threat of coronavirus, widespread data-sharing, monitoring and surveillance could become fixtures of modern public life. Justified as shields against future public health emergencies, temporary applications may become normalized. At the very least, government decisions to hastily introduce immature technologies (and in some cases to oblige citizens by law to use them) set a dangerous precedent.

Nevertheless, such data and AI-driven applications could be useful advances in the fight against coronavirus, and personal data, anonymized and unidentifiable, offers valuable insights for governments navigating this unprecedented public health emergency. The White House is reportedly in active talks with a wide array of tech companies about how they can use anonymized, aggregate-level location data from cellphones. The U.K. government is in discussions with cellphone operators about using location and usage data. And even Germany, which usually champions data rights, introduced a controversial app that uses data donations from fitness trackers and smartwatches to determine the geographical spread of the virus.

Big tech, too, is rushing to the rescue. Google publishes "Community Mobility Reports" for more than 140 countries, offering insights into mobility trends at places such as retail and recreation venues, workplaces and residential areas. Apple and Google are collaborating on contact-tracing technology and have just launched a developer toolkit that includes an API. Facebook is rolling out a "local alerts" feature that allows municipal governments, emergency response organizations and law enforcement agencies to communicate with citizens based on their location.

Data revealing the health and geolocation of citizens is as personal as it gets. The potential benefits weigh heavily, but so do concerns about the abuse and misuse of these applications. Safeguards for data protection exist, the most advanced perhaps being the EU's General Data Protection Regulation (GDPR), but during national emergencies governments have the right to grant exceptions. And frameworks for the lawful and ethical use of AI in democracies are far less developed, where they exist at all.

Many applications could help governments enforce social controls, predict outbreaks and trace infections, some more promising than others. Contact-tracing apps are currently at the center of government interest in Europe and the U.S. Decentralized Privacy-Preserving Proximity Tracing ("DP3T"), a Bluetooth-based approach, may offer a secure, decentralized protocol for consenting users to share data with public health authorities. The European Commission has already issued guidance on contact-tracing apps that favors such decentralized approaches. Centralized or not, apps deployed by EU member states will need to comply with the GDPR.
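The decentralized idea behind DP3T can be illustrated in a few lines. What follows is a simplified sketch, not the DP3T reference implementation: each phone rotates a secret daily key by hashing it, derives short-lived ephemeral IDs (EphIDs) from that key to broadcast over Bluetooth, and keeps the EphIDs it hears on the device. When a user tests positive and consents, only their daily key is published, and every other phone re-derives the EphIDs and checks for a match locally. Deriving EphIDs with HMAC-SHA256 per epoch (the DP3T specification pairs a PRF with an AES-based stream generator), the 96 epochs per day and all class and function names are assumptions for illustration.

```python
import hashlib
import hmac
import secrets

EPHIDS_PER_DAY = 96  # assumption: one EphID per 15-minute epoch
EPHID_LEN = 16       # bytes broadcast over Bluetooth per EphID


def next_day_key(day_key: bytes) -> bytes:
    """Rotate the secret daily key: SK_t = SHA-256(SK_{t-1})."""
    return hashlib.sha256(day_key).digest()


def ephemeral_ids(day_key: bytes) -> list[bytes]:
    """Derive the day's EphIDs from its key.

    Simplification: HMAC-SHA256 over a label plus the epoch index,
    truncated to EPHID_LEN bytes.
    """
    return [
        hmac.new(day_key, b"broadcast key" + i.to_bytes(2, "big"), hashlib.sha256).digest()[:EPHID_LEN]
        for i in range(EPHIDS_PER_DAY)
    ]


class Phone:
    """Hypothetical client: contact data never leaves the device."""

    def __init__(self) -> None:
        self.day_key = secrets.token_bytes(32)
        self.heard: set[bytes] = set()  # EphIDs received from nearby phones

    def advance_day(self) -> None:
        self.day_key = next_day_key(self.day_key)

    def broadcast(self, epoch: int) -> bytes:
        return ephemeral_ids(self.day_key)[epoch]

    def observe(self, ephid: bytes) -> None:
        self.heard.add(ephid)

    def check_exposure(self, published_key: bytes, days: int) -> bool:
        """Re-derive a positive user's EphIDs from a published key and match locally."""
        key = published_key
        for _ in range(days):
            if self.heard.intersection(ephemeral_ids(key)):
                return True
            key = next_day_key(key)  # later days follow from the first contagious key
        return False


# Usage: Alice and Bob meet; Carol never meets anyone.
alice, bob, carol = Phone(), Phone(), Phone()
first_contagious_key = alice.day_key    # Alice's key for day 0
alice.advance_day()                     # one day passes
bob.observe(alice.broadcast(epoch=10))  # Bob's phone hears Alice on day 1
# Alice tests positive and consents to publish only first_contagious_key.
print(bob.check_exposure(first_contagious_key, days=2))    # True
print(carol.check_exposure(first_contagious_key, days=2))  # False
```

The server in this sketch only ever sees keys volunteered by positive users, never who met whom. In a centralized design, by contrast, phones would upload the IDs they heard to a server that performs the matching.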

Austria, Italy and Switzerland have announced they plan to use the decentralized framework developed by Apple and Google. Germany, after public debate and stern warnings from privacy experts, recently ditched plans for a centralized app, opting for a decentralized solution instead. But France and Norway are using centralized systems in which sensitive personal data is stored on a central server.

The U.K. government, too, has been experimenting with an app that uses a centralized approach and is currently being tested on the Isle of Wight: the app, developed by NHSX, the National Health Service's digital unit, will allow health officials to reach out directly and personally to potentially infected people. So far, it remains unclear how the collected data will be used and whether it will be combined with other data sources. Under current provisions, the U.K. remains bound by the GDPR until the end of the Brexit transition period in December 2020.

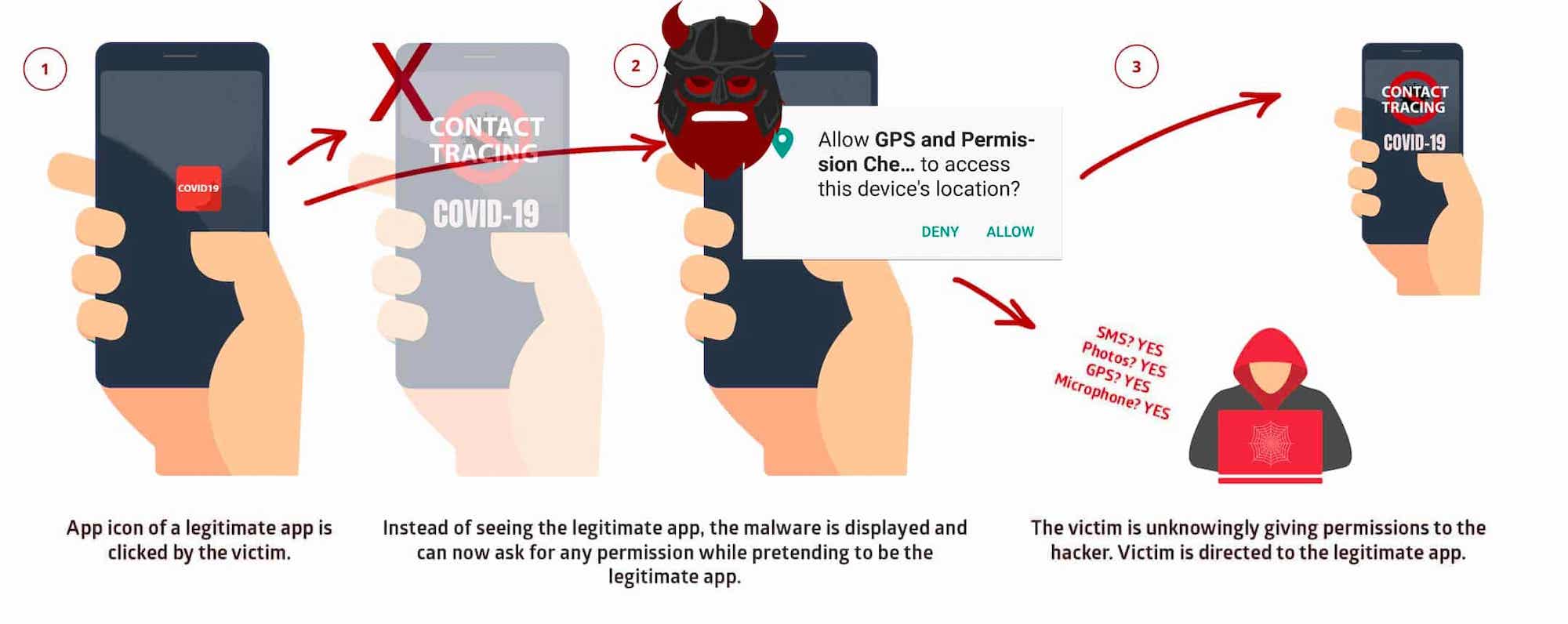

Aside from government-led efforts, a plethora of apps and websites for contact tracing and other forms of outbreak control is worryingly mushrooming, asking citizens to volunteer their personal data while offering few, if any, privacy and security features, let alone proven functionality. Though certainly well-intentioned, these tools often come from hobby developers or amateur hackathons.

Separating the wheat from the chaff is not an easy task, and our governments are most likely not equipped to accomplish it. Artificial intelligence, and especially its use in governance, is still new to public agencies. Put on the spot, regulators struggle to evaluate the legitimacy of different AI systems and their wider implications for democratic values. In the absence of sufficient procurement guidelines and legal frameworks, governments are ill-prepared to make these decisions now, when they are most needed.

Worse yet, once AI-driven applications are let out of the box, they will be difficult to roll back, much like the heightened security measures introduced at airports after 9/11. Governments may argue that they require data access to avoid a second wave of coronavirus or another looming pandemic.

Regulators are unlikely to draft special new rules for AI during the coronavirus crisis, so at the very least we need to proceed with a pact: all AI applications developed to tackle the public health crisis must end up as public applications, with the data, algorithms, inputs and outputs held for the public good by public health researchers and public science agencies. The coronavirus pandemic cannot be allowed to become a pretext for breaking privacy norms and fleecing the public of valuable data.

We all want sophisticated AI to help deliver a medical cure and manage the public health emergency. Arguably, AI's short-term risks to personal privacy and human rights pale beside the loss of human lives. But when coronavirus is under control, we'll want our personal privacy back and our rights reinstated. If governments and firms in democracies are going to tackle this problem and keep institutions strong, we all need to see how the apps work, the public health data needs to end up with medical researchers, and we must be able to audit and disable tracking systems. Over the long term, AI must support good governance.

The coronavirus pandemic is a public health emergency of the most pressing concern, and it will deeply affect governance for decades to come. It also shines a spotlight on gaping shortcomings in our current systems. AI is arriving now with some powerful applications in hand, but our governments are ill-prepared to ensure its democratic use. Faced with the exceptional impacts of a global pandemic, quick-and-dirty policymaking is insufficient to ensure good governance, but it may be the best solution we have.

from Apple – TechCrunch https://ift.tt/2M0GmT1